How to Share Terraform State

Terraform state sharing centralizes infrastructure deployment data for team collaboration. It enables secure sharing of outputs between configurations, streamlining interconnected infrastructure management.

Terraform state is how your code understands the infrastructure it controls, acting as a record of deployed resources. When multiple teams or configurations need to interact, sharing this state effectively becomes a core requirement for collaboration. This article will explain Terraform state and detail the methods for secure sharing across different deployments.

What is Terraform State?

At its core, Terraform state is a snapshot of your infrastructure. When you run terraform apply, Terraform records the mapping between your configuration and the real-world resources it created. This state file, typically named terraform.tfstate, is essential for Terraform to:

- Track resources: Know which resources it manages.

- Plan changes: Understand the current state of your infrastructure to determine what needs to be created, updated, or destroyed.

- Manage metadata: Store resource attributes and dependencies.

Without a valid state file, Terraform has no record of what it manages, which can lead to duplicated resources or accidental destruction. Any mismatch between the recorded state and the real infrastructure is commonly known as drift.

What are Dependencies Between Terraform State Files?

While a single Terraform configuration often generates a single state file, real-world deployments rarely live in isolation. Often, one set of Terraform state files depends on another. For example:

- A networking team might deploy a VPC and subnets, with the details stored in a Terraform state file.

- An application team might then deploy EC2 instances into those subnets, with the details stored in a different Terraform state file.

- A database team might deploy an RDS instance whose endpoint the application team needs; that endpoint is recorded in the database team's state file.

In these scenarios, the output of one Terraform deployment (e.g., VPC ID, subnet IDs, database endpoint) becomes an input for another. This is where dependencies between Terraform state files emerge. Without a mechanism to share these outputs, each team would have to manually discover and input these values, which is error-prone and defeats the purpose of IaC.

To share outputs from one workspace to another, each output must be defined in the Terraform code itself:

    # Define a resource, for example, an AWS S3 bucket
    resource "aws_s3_bucket" "my_bucket" {
      bucket = "my-example-bucket-12345"
      acl    = "private"

      tags = {
        Environment = "Dev"
        Project     = "TerraformExamples"
      }
    }

    # Define an output for the S3 bucket's ID
    output "bucket_id" {
      description = "The ID of the S3 bucket"
      value       = aws_s3_bucket.my_bucket.id
    }

Use Cases: When to Share State Between Terraform Deployments

Sharing Terraform state becomes invaluable in several common scenarios:

Modular Infrastructure: When you break down your infrastructure into logical, independent Terraform modules (e.g., network module, compute module, database module), each deployment manages its own state, but outputs from one are consumed by others.

Multi-Team Environments: As illustrated above, different teams own different pieces of the infrastructure, but their components need to interact. For example, the network team deploys all network-related infrastructure, and application teams read the Terraform state files from the network deployments.

Environments (Dev, Staging, Prod): While each environment typically has its own distinct state, there might be shared foundational elements (e.g., a core shared services VPC) whose state needs to be accessible across environments.

Centralized Data: Common data points, such as a shared S3 bucket name or an IAM role ARN, that multiple deployments need to reference.

Terraform State: Security Best Practices

Sharing Terraform state, while powerful, introduces security considerations that must be addressed:

Sensitive Data: Terraform state can contain sensitive information like database passwords, API keys, or private IP addresses.

- Never commit terraform.tfstate files directly to version control.

- Utilize remote backends (like S3, Azure Blob Storage, GCS) that encrypt state at rest.

- Employ secret management tools (e.g., AWS Secrets Manager, Azure Key Vault, HashiCorp Vault) to store and retrieve sensitive values, referencing them in your Terraform configuration rather than hardcoding them. Terraform's data "aws_secretsmanager_secret" or similar data sources are your friends here. Keep in mind that values read through data sources are still recorded in state, so backend encryption and access control remain necessary.
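As a minimal sketch of the secret-manager pattern above, assuming a secret already exists in AWS Secrets Manager and holds the password as a plain string: the secret name prod/db/password, the resource labels, and the database settings are hypothetical placeholders, not values from this article.

```hcl
# Look up an existing secret by name (hypothetical name, created outside this configuration).
data "aws_secretsmanager_secret" "db_password" {
  name = "prod/db/password"
}

# Read the current version of that secret.
data "aws_secretsmanager_secret_version" "db_password" {
  secret_id = data.aws_secretsmanager_secret.db_password.id
}

# Illustrative consumer: the password comes from the secret, not from the code.
resource "aws_db_instance" "app" {
  identifier          = "app-db"
  engine              = "postgres"
  instance_class      = "db.t3.micro"
  allocated_storage   = 20
  username            = "app"
  password            = data.aws_secretsmanager_secret_version.db_password.secret_string
  skip_final_snapshot = true
}
```

The password never appears in the repository, which is the main point of the pattern; protecting the state backend is still required.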

Access Control (Least Privilege): Not everyone needs access to all state files.

- Implement strict IAM policies (AWS), RBAC (Azure/GCP), or workspace permissions (Terraform Cloud/Scalr) to control who can read, write, and modify state.

- Differentiate read-only access, for consuming outputs, from write access, for managing resources.
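One way to express the read-only side of this split on AWS is an IAM policy that can only list the bucket and fetch a single state object. This is a sketch under assumptions: the bucket name, state key, and policy name are placeholders chosen for illustration.

```hcl
# Read-only access to one state file, for configurations that only consume outputs.
data "aws_iam_policy_document" "state_read_only" {
  statement {
    sid       = "ListStateBucket"
    actions   = ["s3:ListBucket"]
    resources = ["arn:aws:s3:::my-terraform-state-bucket"]
  }

  statement {
    sid     = "ReadNetworkState"
    actions = ["s3:GetObject"]
    resources = [
      "arn:aws:s3:::my-terraform-state-bucket/path/to/my/network-terraform.tfstate",
    ]
  }
}

resource "aws_iam_policy" "state_read_only" {
  name   = "terraform-state-read-only" # hypothetical policy name
  policy = data.aws_iam_policy_document.state_read_only.json
}
```

Identities that manage the resources themselves would additionally need write access to the state object and to whatever locking mechanism the backend uses.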

State Locking: Concurrent operations on the same state file can lead to corruption.

- Ensure your remote backend supports state locking to prevent multiple users or processes from writing to the state simultaneously. Most cloud storage backends and dedicated Terraform platforms, like Terraform Cloud or Scalr, offer state locking.

State History and Rollback:

- Leverage remote backends that provide versioning for your state files. This allows you to revert to a previous working state if a deployment goes wrong.

Auditing:

- Enable logging and auditing for your state backend to track who accessed and modified the state files. Again, this is where dedicated Terraform platforms really help.

How to Share State When Using Terraform

When you're operating purely with open-source Terraform, without a managed platform like Terraform Cloud or Scalr, state management and sharing become your responsibility. The core mechanism for sharing state is through remote backends. Terraform supports various remote backends, primarily cloud storage services, such as S3, which allow you to store your terraform.tfstate file in a centralized, accessible location.

This approach requires you to manually configure the backend and often set up supporting services for state locking.

Configuring a Remote Backend (e.g., S3, Azure Blob Storage, GCS)

The most common and recommended way to share state in an open-source setup is by using cloud object storage. These services offer high durability, versioning, and often built-in locking mechanisms.

General Steps:

- Choose a Backend: Select a cloud storage service like Amazon S3, Azure Blob Storage, or Google Cloud Storage.

- Create the Storage Resource: Manually create the necessary bucket/container in your chosen cloud provider before running terraform init.

- Configure Terraform Backend: Add a backend block to your root module's main.tf (or a dedicated backend.tf file).

1. Set the Backend in the Code

AWS S3:

Prerequisites:

- An S3 bucket named my-terraform-state-bucket (with versioning enabled for recovery).

- A DynamoDB table named terraform-lock-table with a primary key of LockID (string type). This table is used by Terraform for robust state locking.

- Appropriate IAM permissions for the users/roles running Terraform to access the S3 bucket and DynamoDB table.

Example code snippet:

    terraform {
      backend "s3" {
        bucket         = "my-terraform-state-bucket"
        key            = "path/to/my/terraform.tfstate"
        region         = "us-east-1"
        encrypt        = true                   # Enable server-side encryption
        dynamodb_table = "terraform-lock-table" # For state locking
      }
    }
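The S3 prerequisites listed above can be created by hand, or from a small bootstrap configuration that keeps its own state separately, since the bucket must exist before any other configuration can run terraform init against it. The following is a sketch, not a required setup: the resource labels, the AES256 encryption choice, and the on-demand billing mode are assumptions.

```hcl
# Bucket that will hold the state files.
resource "aws_s3_bucket" "tf_state" {
  bucket = "my-terraform-state-bucket"
}

# Versioning lets you recover an earlier state file.
resource "aws_s3_bucket_versioning" "tf_state" {
  bucket = aws_s3_bucket.tf_state.id

  versioning_configuration {
    status = "Enabled"
  }
}

# Default server-side encryption for everything written to the bucket.
resource "aws_s3_bucket_server_side_encryption_configuration" "tf_state" {
  bucket = aws_s3_bucket.tf_state.id

  rule {
    apply_server_side_encryption_by_default {
      sse_algorithm = "AES256"
    }
  }
}

# State must never be publicly readable.
resource "aws_s3_bucket_public_access_block" "tf_state" {
  bucket                  = aws_s3_bucket.tf_state.id
  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}

# Lock table with the LockID string key that the S3 backend expects.
resource "aws_dynamodb_table" "tf_lock" {
  name         = "terraform-lock-table"
  billing_mode = "PAY_PER_REQUEST"
  hash_key     = "LockID"

  attribute {
    name = "LockID"
    type = "S"
  }
}
```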

Azure Blob Storage Backend

Prerequisites:

- An Azure Storage Account (myazuretfstateaccount) and a container (tfstate) created within it.

- Appropriate Azure RBAC permissions for the identity running Terraform (e.g., "Storage Blob Data Contributor" role on the container). Azure Blob Storage provides its own locking mechanism.

Example code snippet:

    terraform {
      backend "azurerm" {
        resource_group_name  = "tfstate-rg"
        storage_account_name = "myazuretfstateaccount"
        container_name       = "tfstate"
        key                  = "path/to/my/terraform.tfstate"
        # Authentication is typically handled via Azure CLI login, Managed Identities, or Service Principals
      }
    }

Google Cloud Storage (GCS) Backend

Prerequisites:

- A GCS bucket named my-gcs-terraform-state-bucket (with object versioning enabled).

- Appropriate IAM permissions for the identity running Terraform (e.g., "Storage Object Admin" on the bucket). GCS provides native state locking.

Example code snippet:

    terraform {
      backend "gcs" {
        bucket = "my-gcs-terraform-state-bucket"
        prefix = "path/to/my/states" # Optional: A prefix to organize state files within the bucket
      }
    }
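If you prefer to create the GCS prerequisite with Terraform rather than by hand, a separate bootstrap configuration could look roughly like this. The resource label, the US location, and the uniform bucket-level access setting are assumptions made for the sketch.

```hcl
# State bucket with object versioning, created before any configuration uses it as a backend.
resource "google_storage_bucket" "tf_state" {
  name     = "my-gcs-terraform-state-bucket"
  location = "US" # assumed location; pick the one that fits your project

  # Keep older generations of the state object for recovery.
  versioning {
    enabled = true
  }

  # Manage access through IAM only, not per-object ACLs.
  uniform_bucket_level_access = true
}
```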

2. Initializing Terraform with Remote Backend

After defining the backend block, run terraform init. Terraform will detect the backend configuration and prompt you to migrate any existing local state to the remote backend.

    terraform init

    Initializing the backend...

    Initializing provider plugins...
    - Reusing previous version of hashicorp/aws from the dependency lock file
    - Using previously-installed hashicorp/aws v5.58.0

    Terraform has been successfully initialized!

    You may now begin working with Terraform. Try running "terraform plan" to see
    any changes that are required for your infrastructure. All Terraform commands
    should now work.

    If you ever set or change modules or backend configuration for Terraform,
    rerun this command to reinitialize your working directory. If you forget, other
    commands will detect it and remind you to do so if necessary.

Once terraform plan and terraform apply have run, the state, including the defined outputs, is stored in the backend.

3. Sharing Outputs with terraform_remote_state

Once your state is stored in a remote backend, other Terraform configurations (in different directories or even different Git repositories) can access its outputs using the terraform_remote_state data source. A terraform_remote_state data source is a mechanism that allows one Terraform configuration to read the outputs from another, separately managed, Terraform configuration's state.

Essentially, it's how you tell Terraform, "Go look at the state file for that other deployment, and tell me the values of its declared outputs."

Example of using the remote state data source:

    # In a separate Terraform configuration, needing outputs from the 'network' state
    data "terraform_remote_state" "network" {
      backend = "s3" # Must match the backend type of the remote state

      config = {
        bucket = "my-terraform-state-bucket"
        key    = "path/to/my/network-terraform.tfstate" # The specific key for the network state
        region = "us-east-1"
        # Include any other backend-specific configuration, e.g., dynamodb_table for S3
        dynamodb_table = "terraform-lock-table"
      }
    }

    resource "aws_instance" "app_server" {
      ami           = "ami-0abcdef1234567890"
      instance_type = "t2.micro"
      subnet_id     = data.terraform_remote_state.network.outputs.private_subnet_id # Accessing an output
      # ...
    }

For this to work, the remote state data source must point at the correct bucket and key, and the identity running Terraform needs read permission on the referenced state file.
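The consumer above can only read values that the network configuration declares as outputs in its root module; terraform_remote_state does not expose resources or outputs of nested modules. A sketch of the producing side, with hypothetical resource labels and CIDR ranges, might look like this:

```hcl
# In the 'network' configuration whose state is stored at
# path/to/my/network-terraform.tfstate

resource "aws_vpc" "main" {
  cidr_block = "10.0.0.0/16" # illustrative range
}

resource "aws_subnet" "private" {
  vpc_id     = aws_vpc.main.id
  cidr_block = "10.0.1.0/24" # illustrative range
}

# Root-level output; this is the name the consumer references as
# data.terraform_remote_state.network.outputs.private_subnet_id
output "private_subnet_id" {
  description = "ID of the private subnet shared with application deployments"
  value       = aws_subnet.private.id
}
```

If an output is renamed or removed here, every configuration that reads it will fail at plan time, so treat output names as a shared interface.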

Important Considerations for Terraform State Sharing:

- Manual Backend Management: You are responsible for creating, configuring, and managing the lifecycle of your remote backend (S3 buckets, DynamoDB tables, etc.).

- State Locking: This is CRITICAL for team collaboration. Ensure your chosen backend supports state locking, and configure it correctly (e.g., DynamoDB with S3). Without it, concurrent terraform apply operations can corrupt your state file.

- Permissions: Carefully manage IAM/RBAC permissions to your backend storage. Use the principle of least privilege.

- Versioning: Always enable versioning on your state storage (e.g., S3 Bucket Versioning, GCS Object Versioning) to protect against accidental deletions or corruptions. This allows you to revert to a previous state file if necessary.

- Encryption: Configure encryption at rest for your state files in the cloud storage bucket. Most cloud providers offer this by default or through easy configuration.

- CI/CD Integration: When using remote backends, your CI/CD pipelines will simply need the appropriate cloud credentials to access the backend storage.

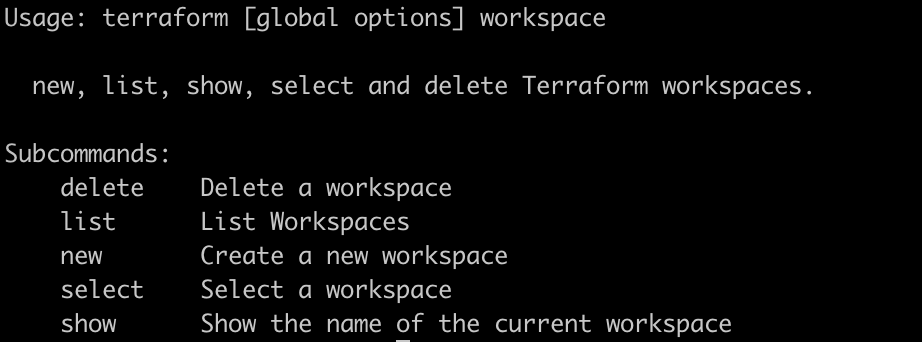

- Workspace Management: While Terraform has built-in terraform workspace commands, when using simple remote backends, each workspace typically corresponds to a distinct state file key within your bucket (e.g., path/to/my/states/dev.tfstate, path/to/my/states/prod.tfstate; the exact layout depends on the backend). This is managed by Terraform automatically when you use terraform workspace new <name>.
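When both the producing and the consuming configuration use workspaces with the same names, the remote state data source can follow the current workspace. The sketch below assumes the GCS backend, which stores each workspace under the configured prefix as <workspace>.tfstate; the bucket, prefix, and matching workspace names are assumptions.

```hcl
# Read the network state that belongs to the same workspace (dev, prod, ...)
# as the configuration currently being applied.
data "terraform_remote_state" "network" {
  backend   = "gcs"
  workspace = terraform.workspace

  config = {
    bucket = "my-gcs-terraform-state-bucket"
    prefix = "path/to/my/states"
  }
}
```

With this in place, running in the dev workspace reads path/to/my/states/dev.tfstate, and running in prod reads path/to/my/states/prod.tfstate.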

By carefully planning and implementing these open-source backend strategies, you can achieve robust and secure Terraform state sharing for your team's collaborative infrastructure deployments.

Orchestrating Terraform Runs and State Sharing

While sharing Terraform state files via a remote backend offers a foundational level of collaboration, the true power comes when this capability is integrated into an automated workflow. The ability to programmatically consume outputs from a source state file in a dependent deployment, coupled with a CI/CD pipeline that orchestrates these operations, unlocks highly efficient and reliable infrastructure management. Imagine a scenario where a successful terraform apply for your network infrastructure automatically triggers the deployment of your compute resources, seamlessly inheriting the necessary VPC IDs or subnet details from the network state. This automated dependency management minimizes manual intervention, reduces human error, and ensures that your interconnected infrastructure components are always deployed and updated in the correct sequence, reflecting a single source of truth for your entire environment.

Example

The next question is how to actually build this orchestration. Tools like Scalr and Terraform Cloud do this out of the box, but a GitHub Actions workflow is a simple way to illustrate it.

This example assumes your Terraform state is stored in an S3 bucket with DynamoDB locking, and you're using terraform_remote_state data sources.

Repo 1: network-infra

- Creates VPC, subnets, security groups.

- Outputs vpc_id and public_subnet_id.

- Terraform state stored in s3://my-tf-states/prod/network/terraform.tfstate.

Repo 2: app-servers

- Creates EC2 instances, ALB.

- Uses terraform_remote_state to get vpc_id and public_subnet_id from network-infra.

- Terraform state stored in s3://my-tf-states/prod/app-servers/terraform.tfstate.
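On the Terraform side, the app-servers repository might contain something like the following sketch. The backend block is left empty on the assumption that the CI pipeline supplies bucket, key, and region through -backend-config flags at init time (partial backend configuration); the resource labels, AMI ID, and instance type are placeholders.

```hcl
terraform {
  # Settings are injected by the pipeline via `terraform init -backend-config=...`.
  backend "s3" {}
}

# Read the outputs published by the network-infra deployment.
data "terraform_remote_state" "network" {
  backend = "s3"

  config = {
    bucket = "my-tf-states"
    key    = "prod/network/terraform.tfstate"
    region = "us-east-1"
  }
}

resource "aws_security_group" "app" {
  name   = "app-servers"
  vpc_id = data.terraform_remote_state.network.outputs.vpc_id
}

resource "aws_instance" "app" {
  ami                    = "ami-0abcdef1234567890" # placeholder AMI ID
  instance_type          = "t2.micro"
  subnet_id              = data.terraform_remote_state.network.outputs.public_subnet_id
  vpc_security_group_ids = [aws_security_group.app.id]
}
```

Because the network values are resolved from state on every plan, a re-run of this configuration after a network change picks up the new IDs without any manual copying.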

network-infra GitHub Action (.github/workflows/deploy-network.yml):

    name: Deploy Network Infrastructure

    on:
      push:
        branches:
          - main
        paths:
          - 'network-infra/**' # Trigger only if changes are in network-infra folder

    env:
      AWS_ACCESS_KEY_ID: ${{ secrets.AWS_ACCESS_KEY_ID }}
      AWS_SECRET_ACCESS_KEY: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
      AWS_REGION: us-east-1 # Or read from env/config

    jobs:
      deploy:
        runs-on: ubuntu-latest
        steps:
          - name: Checkout code
            uses: actions/checkout@v4

          - name: Setup Terraform
            uses: hashicorp/setup-terraform@v3
            with:
              terraform_version: 1.x.x # Specify your desired version

          - name: Terraform Init
            id: init
            run: terraform init -backend-config="bucket=my-tf-states" -backend-config="key=prod/network/terraform.tfstate" -backend-config="region=${{ env.AWS_REGION }}" -backend-config="dynamodb_table=terraform-locks"
            working-directory: network-infra

          - name: Terraform Plan
            id: plan
            run: terraform plan -no-color
            working-directory: network-infra
            env:
              TF_VAR_environment: production # Example of passing a variable

          - name: Terraform Apply
            id: apply
            run: terraform apply -auto-approve
            working-directory: network-infra

          # Important: Trigger downstream deployment
          - name: Trigger App Servers Deployment
            if: success() # Only trigger if the network apply was successful
            uses: peter-evans/repository-dispatch@v3
            with:
              token: ${{ secrets.PAT_TOKEN }} # Needs a PAT with repo scope to trigger
              repository: your-org/app-servers # The repository containing app-servers
              event-type: deploy-app-servers # A custom event type for the app-servers workflow
              client-payload: '{"ref": "${{ github.ref }}", "sha": "${{ github.sha }}"}' # Optional payload

app-servers GitHub Action (.github/workflows/deploy-app-servers.yml):

    name: Deploy Application Servers

    on:
      push:
        branches:
          - main
        paths:
          - 'app-servers/**'
      repository_dispatch: # This is how the network-infra workflow triggers this one
        types: [deploy-app-servers]

    env:
      AWS_ACCESS_KEY_ID: ${{ secrets.AWS_ACCESS_KEY_ID }}
      AWS_SECRET_ACCESS_KEY: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
      AWS_REGION: us-east-1

    jobs:
      deploy:
        runs-on: ubuntu-latest
        steps:
          - name: Checkout code
            uses: actions/checkout@v4

          - name: Setup Terraform
            uses: hashicorp/setup-terraform@v3
            with:
              terraform_version: 1.x.x

          - name: Terraform Init
            id: init
            run: terraform init -backend-config="bucket=my-tf-states" -backend-config="key=prod/app-servers/terraform.tfstate" -backend-config="region=${{ env.AWS_REGION }}" -backend-config="dynamodb_table=terraform-locks"
            working-directory: app-servers

          - name: Terraform Plan
            id: plan
            run: terraform plan -no-color
            working-directory: app-servers
            env:
              TF_VAR_environment: production # Example of passing a variable

          - name: Terraform Apply
            id: apply
            run: terraform apply -auto-approve
            working-directory: app-servers

While the above gets the job done, there is quite a bit of connecting and maintenance that needs to be done to make sure this keeps running. This is where using something like Scalr's federated environments, run triggers, and state sharing helps.

Conclusion

Terraform state is the cornerstone of effective infrastructure management with Terraform. Understanding its role, the dependencies between state files, and the various methods for secure sharing is paramount for collaborative and scalable IaC deployments. By embracing remote backends, implementing strong access controls, and leveraging platforms like Terraform Cloud or Scalr, you can ensure your Terraform state is not just managed, but managed securely and efficiently, empowering your teams to build and deploy infrastructure with confidence.

Extra Resources: